Learning Affective Explanations for Real-World Visual Data


Panos Achlioptas

Snap Inc.

Maks Ovsjanikov

École Polytechnique


Leonidas J. Guibas

Stanford University


Sergey Tulyakov

Snap Inc.

Abstract

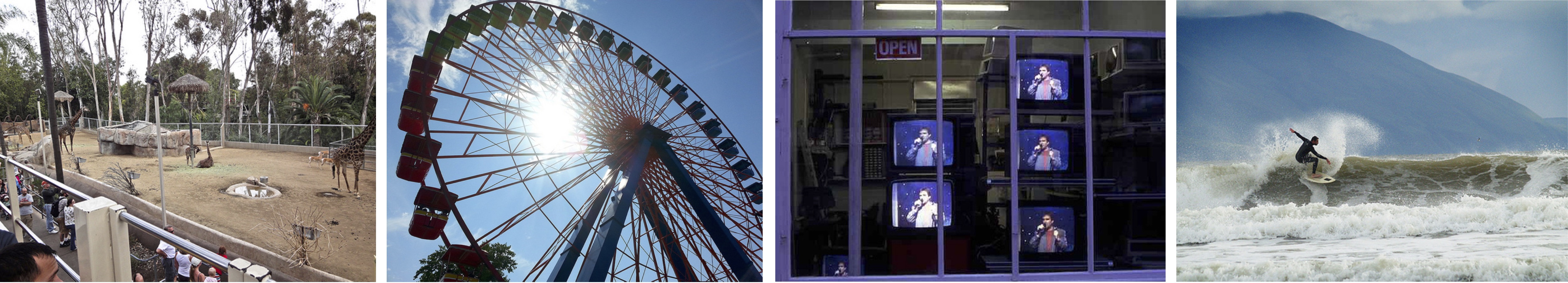

Real-world images often convey emotional intent, i.e., the photographer tries to capture and promote an emotionally interesting story. In this work, we explore the emotional reactions that real-world images tend to induce, using natural language as the medium to express the rationale behind an affective response to a given visual stimulus. To embark on this journey, we introduce and share with the research community a large-scale dataset that contains emotional reactions and free-form textual explanations for 85K publicly available images, analyzed by 6,283 annotators who were asked to indicate and explain how and why they felt a particular way when observing a particular image, producing a total of 526K responses. Even though emotional reactions are subjective and sensitive to context (personal mood, social status, past experiences), we show that there is significant common ground for capturing potentially plausible emotional responses with large support in the subject population. In light of this key observation, we ask the following questions: i) Can we develop multi-modal neural networks that provide reasonable affective responses to real-world visual data, explained with language? ii) Can we steer such methods towards creating explanations with varying degrees of pragmatic language, or towards justifying different emotional reactions, while adapting to the underlying visual stimulus? Finally, iii) how can we evaluate the performance of such methods on this novel task? With this work, we take the first steps toward partially addressing all of these questions, thus paving the way for richer, more human-centric, and emotionally aware image analysis systems.

Summary of Main Contributions and Findings

- We curate and share Affection, a large-scale dataset of emotional reactions to real-world images, together with free-form textual explanations of those reactions.

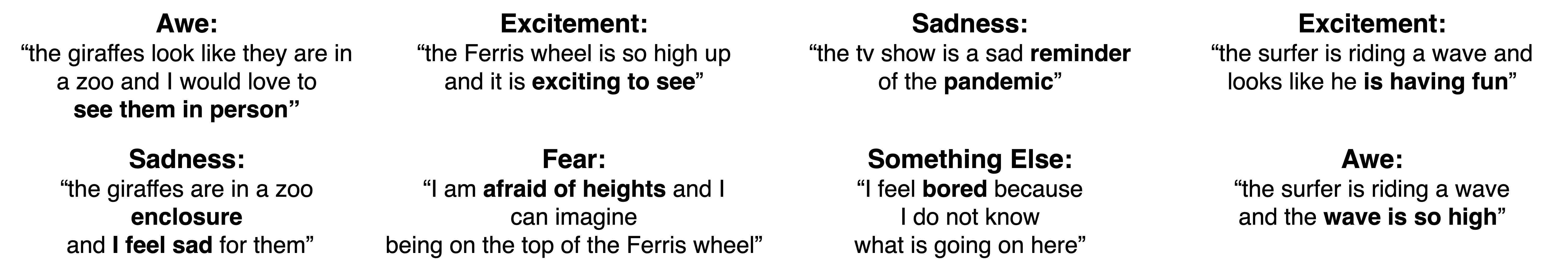

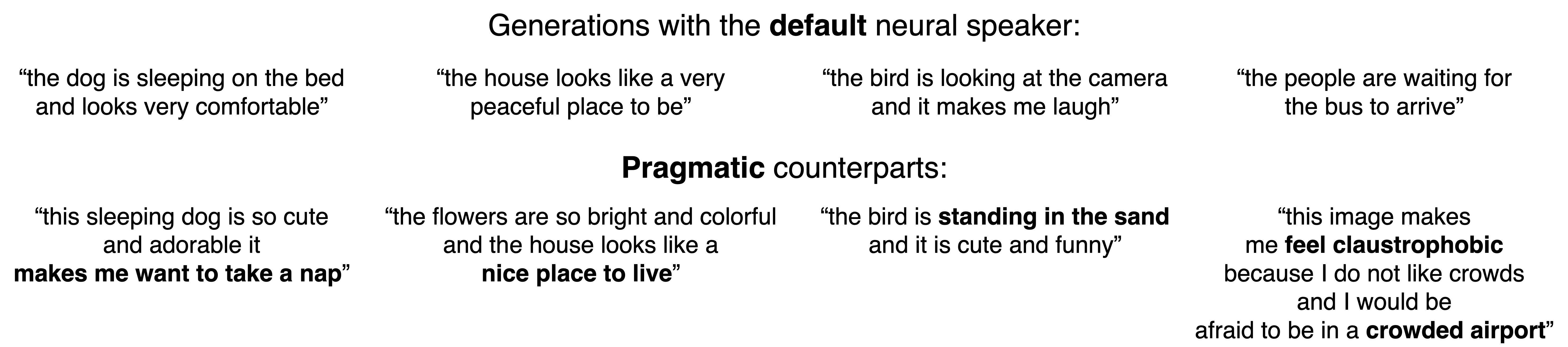

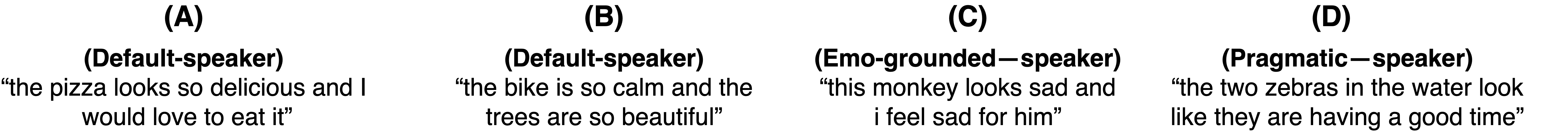

- We introduce the task of Emotional Explanation Captioning (EAC) and develop corresponding neural speakers that can create plausible utterances explaining emotions grounded in real-world images.

- Our best neural speaker passes an emotional Turing test 65% of the time; i.e., with this probability, third-party human observers judge its generations to have been written by other humans rather than by a machine.

- Our neural listeners and CLIP-based studies indicate that Affection contains a significant number of discriminative references, which make it possible to identify the underlying images from their affective explanations. Building on this observation, we also experiment with pragmatic variants of our neural speakers.

Qualitative Neural Speaking Results

Video

The Affection Dataset

The Affection dataset is provided under specific terms of use. We are in the process of releasing it. If you are interested in downloading the data, please first fill out the linked form accepting the terms. We will email you once the data are ready.

Meanwhile, you can quickly browse a slightly filtered version of Affection's annotations!

Important Disclaimer: Affection is a real-world dataset containing the opinions and sentiments of thousands of people. Thus, we expect it to include text with certain biases, factual inaccuracies, and possibly foul language. The provided neural networks are also likely to be biased and inaccurate, similar to their training data. Their outputs, as well as the subjective opinions and sentiments present in Affection, in no way express the personal views and preferences of the authors. Please use our work responsibly.

Contact

To contact all authors, please use affective.explanations@gmail.com or their individual email addresses.

Citation

If you find our work useful in your research, please consider citing:

@article{achlioptas2022affection,
  title={{Affection}: Learning Affective Explanations for Real-World Visual Data},
  author={Achlioptas, Panos and Ovsjanikov, Maks and Guibas, Leonidas and Tulyakov, Sergey},
  journal={Computing Research Repository (CoRR)},
  volume={abs/2210.01946},
  year={2022}
}

Acknowledgements

P.A. wants to thank Professors James Gross, Noah Goodman, and Dan Jurafsky for their initial discussions and the ample motivation they provided for exploring this research direction. P.A. also wants to thank Ashish Mehta for fruitful discussions on alexithymia, Grygorii Kozhemiak for helping design Affection's logos and webpage, and Ishan Gupta for his help building Affection's browser. Last but not least, the authors want to emphasize their gratitude to all the hard-working Amazon Mechanical Turkers without whom this work would have been impossible.

Parts of this work were supported by the ERC Starting Grant No. 758800 (EXPROTEA), the ANR AI Chair AIGRETTE, and a Vannevar Bush Faculty Fellowship.